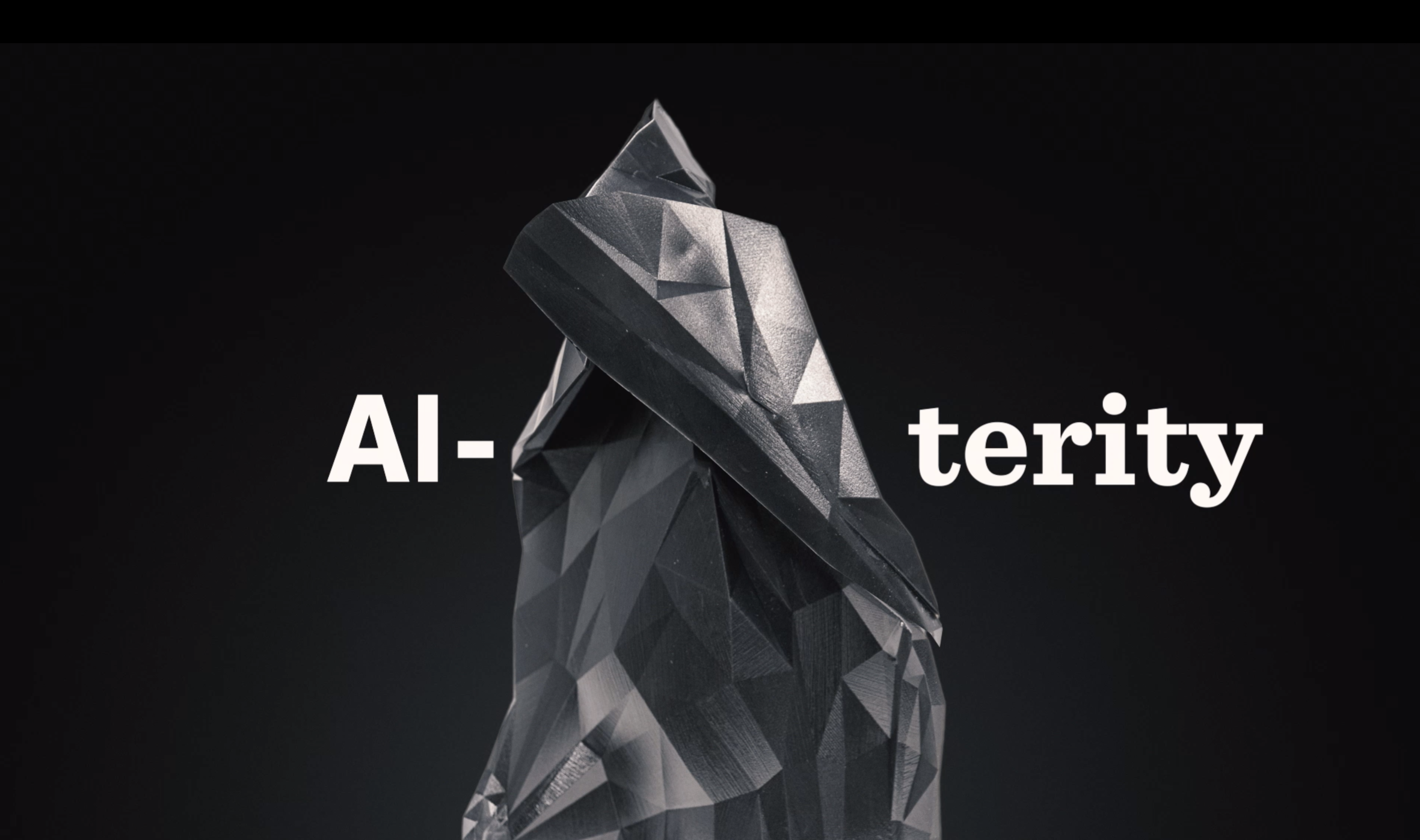

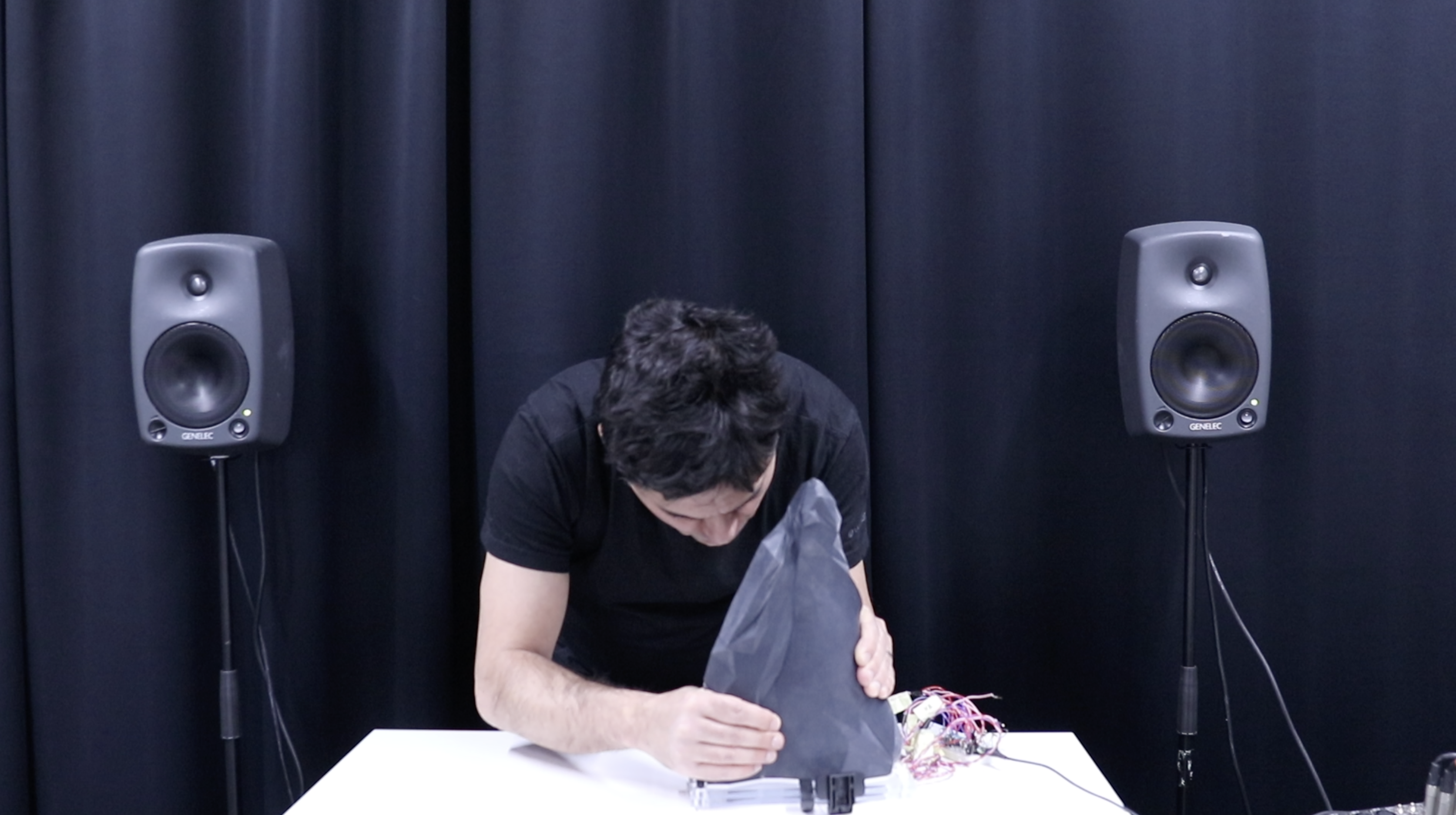

AI-terity is a deformable, non-rigid musical instrument that incorporates the computational features of a particular AI model to generate relevant audio samples for real-time audio synthesis. Our research interest has been to explore generative AI models applied in the audio domain and to integrate them into the development of new musical instruments. This in turn has the potential to bring an alternative synthesis of knowledge about musical instruments and to sharpen the focus on the sounding features of the new instruments.

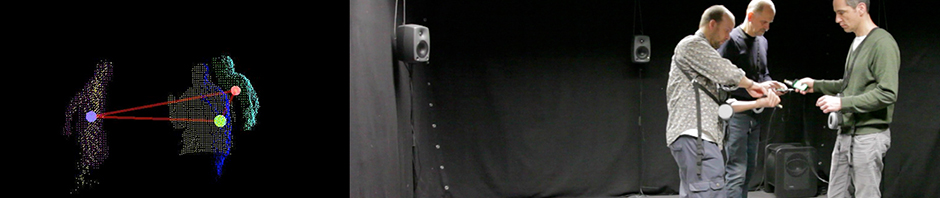

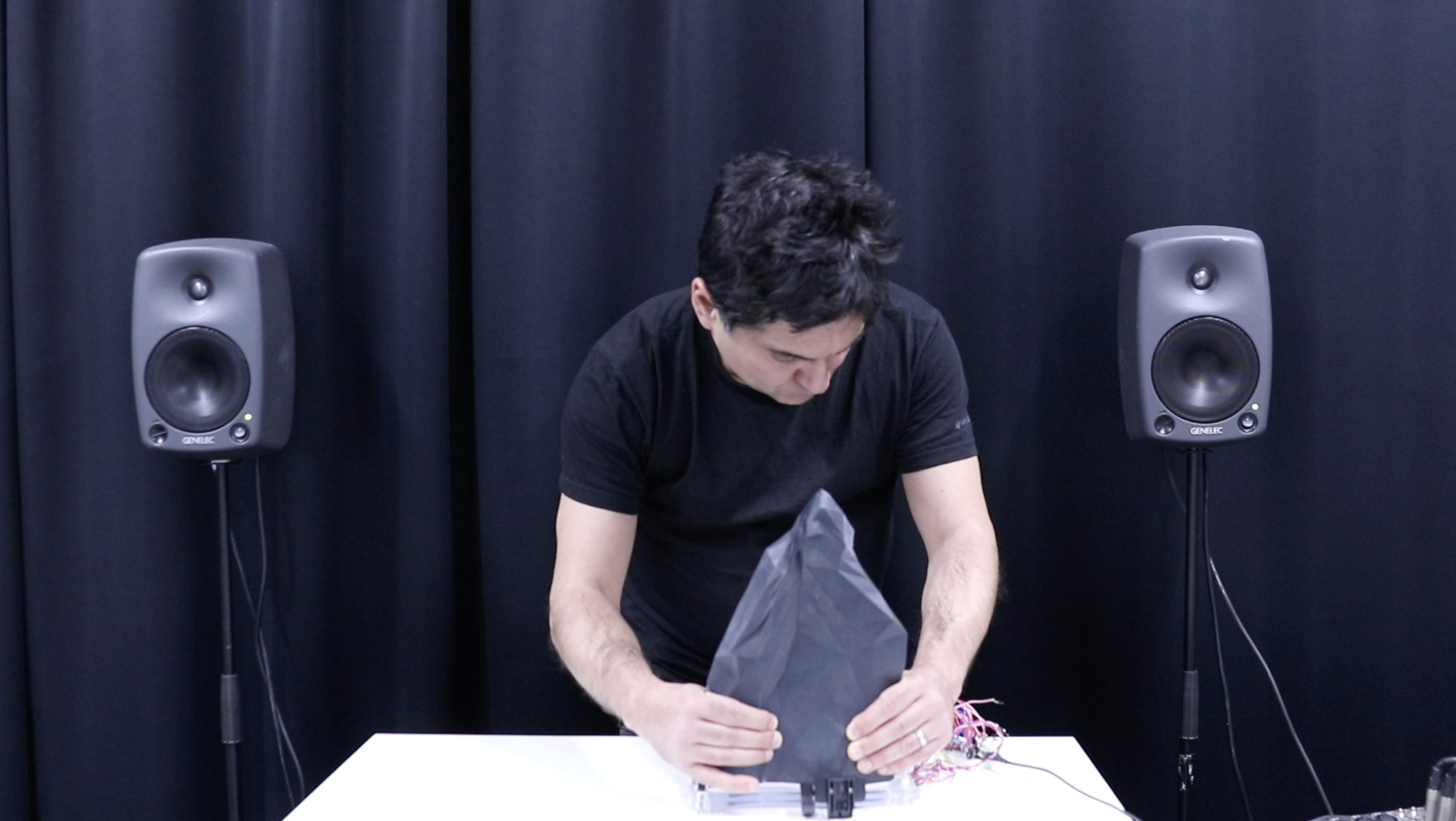

The instrument's stiffness and physical deformability turn its folded shape into an opening: when a handheld physical action is applied, the instrument generates audio samples. This physical manipulation changes the control parameters of a sample-based granular synthesis engine, and new audio samples are distributed around the surface once the performer begins interacting directly with the instrument. Being able to move through timbre changes in the sonic space gives the performer one route to an idiomatic digital relationship between sound making and control actions with the AI-terity instrument.
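To make the idea of deformation driving granular synthesis more concrete, here is a minimal sketch of sample-based granular synthesis with overlap-added, Hann-windowed grains. It assumes a single mono source sample stored as a list of floats and a normalised deformation reading between 0 and 1. The names `deformation_to_params` and `granulate`, and the particular mapping from deformation to read position, grain length, and jitter, are hypothetical illustrations, not AI-terity's actual implementation, which the text does not specify.

```python
import math
import random


def hann(n):
    """Symmetric Hann window of length n (n >= 2)."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def deformation_to_params(bend):
    """HYPOTHETICAL mapping from a normalised deformation reading (0..1)
    to granular parameters; chosen only for illustration."""
    bend = min(max(bend, 0.0), 1.0)  # clamp out-of-range sensor values
    return {
        "position": bend,                    # bending scans through the sample
        "grain_len": int(256 + bend * 768),  # 256..1024 samples per grain
        "spread": 0.05 + 0.25 * bend,        # more bending, more random jitter
    }


def granulate(source, out_len, grain_len, hop, position, spread, seed=0):
    """Overlap-add windowed grains read from `source`.

    position: 0..1, centre of the read region within the source
    spread:   maximum random offset of each grain's start, as a
              fraction of the source length
    hop:      samples between successive grain onsets in the output
    """
    max_start = len(source) - grain_len
    if max_start < 0:
        raise ValueError("grain_len must not exceed the source length")
    rng = random.Random(seed)  # seeded so the output is reproducible
    win = hann(grain_len)
    out = [0.0] * out_len
    for onset in range(0, out_len, hop):
        # Jitter the read position, then clamp it to a valid start index.
        centre = position * max_start
        jitter = rng.uniform(-spread, spread) * len(source)
        start = int(min(max(centre + jitter, 0), max_start))
        for i in range(grain_len):
            j = onset + i
            if j >= out_len:
                break
            out[j] += source[start + i] * win[i]
    return out


if __name__ == "__main__":
    sr = 16000
    # One second of a 220 Hz sine as a stand-in for a generated audio sample.
    source = [math.sin(2 * math.pi * 220 * n / sr) for n in range(sr)]
    params = deformation_to_params(0.4)
    out = granulate(source, out_len=sr // 2, grain_len=params["grain_len"],
                    hop=params["grain_len"] // 2, position=params["position"],
                    spread=params["spread"])
    print(len(out), max(abs(x) for x in out))
```

Using a hop of half the grain length keeps at most two grains overlapping at any output sample, so successive Hann windows blend smoothly. In a live setting the deformation reading would be re-sampled continuously and the parameters updated per block rather than once.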

Current tools are advancing such existing models and enabling the modeling of new sounds, making it possible to generate audio efficiently and quickly while still obtaining interesting results from a trained dataset of audio samples. Deep learning with audio takes real-time sound synthesis to a new level by producing entirely new sounds.

The composition *Uncertainty*, written for AI-terity, was performed at Ars Electronica 2020 and at the New Interfaces for Musical Expression (NIME 2021) and AI Music Creativity (AIMC 2021) conferences.