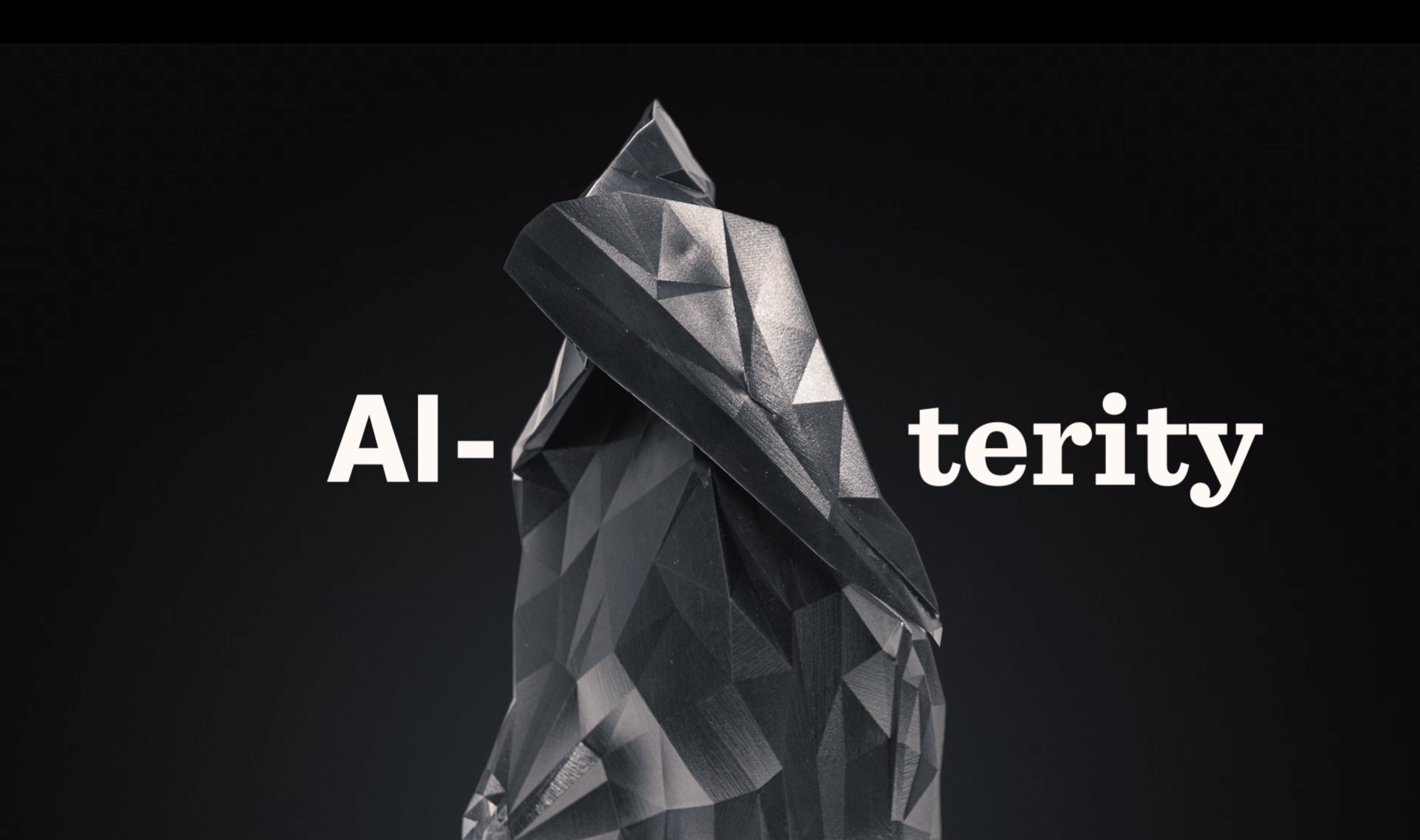

AI-terity

AI-terity is a deformable, non-rigid musical instrument that comprises computational features of the GANSpaceSynth AI model for generating relevant audio samples for real-time audio synthesis. Our research interest has been to explore generative AI models applied in the audio domain and to integrate them into the development of new musical instruments, strengthening our ability to focus on the sounding features of these instruments.

2025 / DMI-P-I project

Exploring Sonic Latent Space

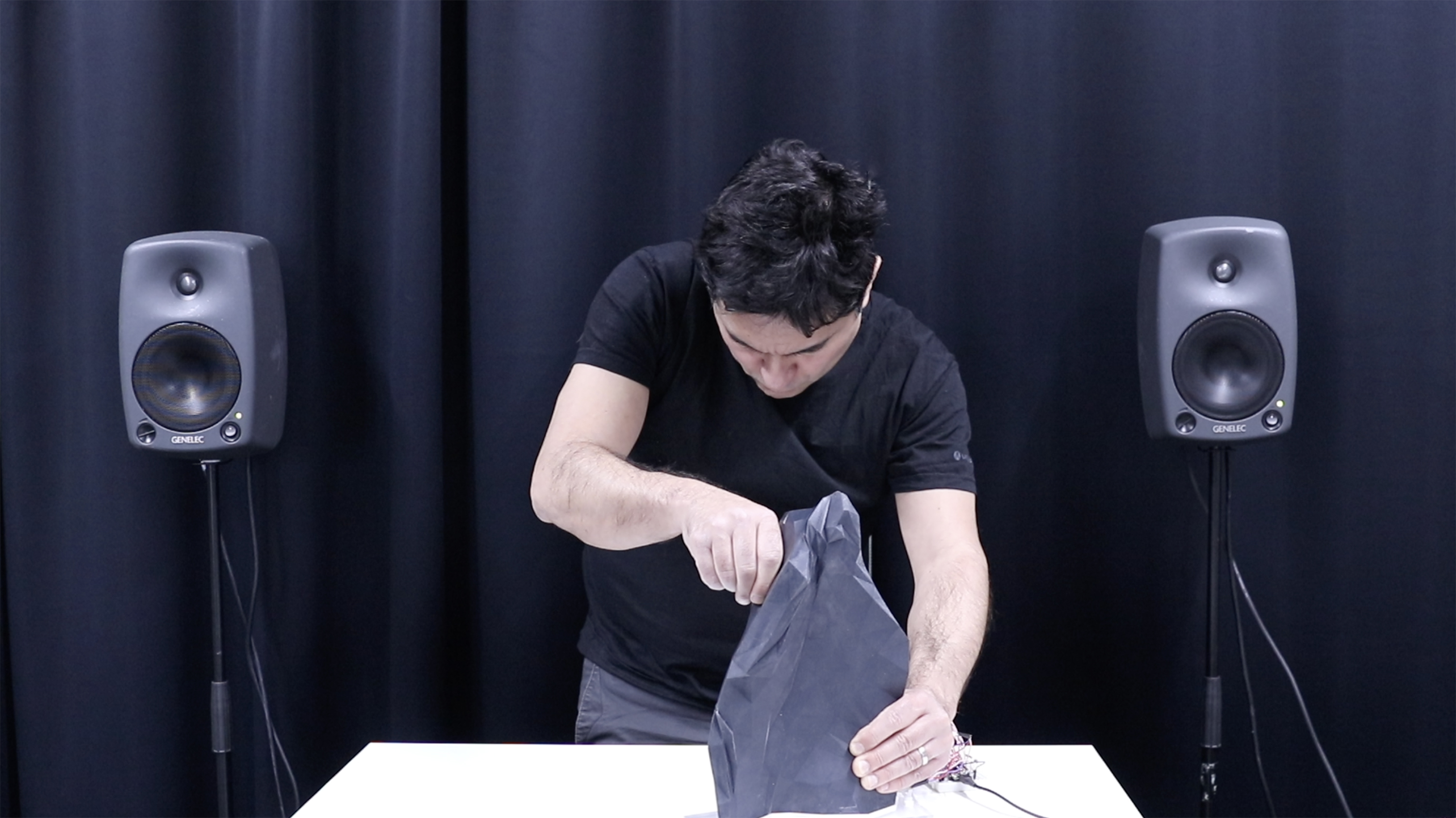

The stiffness and physical deformability of the instrument create openings in its folded shape, generating audio samples when handheld physical actions are applied. This physical manipulation changes control parameters in sample-based granular synthesis, while new audio samples are distributed across the surface as the performer directly interacts with the instrument. Navigating timbral variations in the sonic latent space enables the musician to develop an idiomatic digital relationship with sound-making and control actions using the AI-terity instrument.
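As a rough illustration of how a physical control value could drive sample-based granular synthesis, the sketch below maps a hypothetical normalised deformation reading (0 to 1) to grain length and grain density, then overlap-adds windowed grains from a source sample. The function names, the mapping ranges and the sine-tone source are all assumptions made for illustration; this is not the instrument's actual synthesis engine.

```python
import math
import random

SAMPLE_RATE = 44100  # assumed sample rate in Hz


def deformation_to_params(deformation):
    """Map a hypothetical deformation reading in [0, 1] to grain parameters."""
    d = min(max(float(deformation), 0.0), 1.0)  # clamp to the valid range
    grain_ms = 120.0 - 100.0 * d  # more deformation -> shorter grains (120 ms down to 20 ms)
    density = 5.0 + 45.0 * d      # more deformation -> more grains per second (5 up to 50)
    return grain_ms, density


def hann(n):
    """Hann window of length n (n >= 2), used to fade each grain in and out."""
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * i / (n - 1)) for i in range(n)]


def granulate(source, deformation, duration_s=1.0, seed=0):
    """Overlap-add Hann-windowed grains taken from random positions in `source`."""
    rng = random.Random(seed)
    grain_ms, density = deformation_to_params(deformation)
    grain_len = int(SAMPLE_RATE * grain_ms / 1000.0)
    out_len = int(SAMPLE_RATE * duration_s)
    out = [0.0] * (out_len + grain_len)  # extra room so late grains fit
    window = hann(grain_len)
    n_grains = max(1, int(density * duration_s))
    for _ in range(n_grains):
        src_start = rng.randrange(0, len(source) - grain_len)
        dst_start = rng.randrange(0, out_len)
        for i in range(grain_len):
            out[dst_start + i] += source[src_start + i] * window[i]
    peak = max(abs(x) for x in out)
    out = out[:out_len]
    # Normalise by the peak of the summed grains to avoid clipping
    return [x / peak for x in out] if peak > 0 else out


# Stand-in source: one second of a 220 Hz sine tone
source = [math.sin(2.0 * math.pi * 220.0 * n / SAMPLE_RATE) for n in range(SAMPLE_RATE)]
audio = granulate(source, deformation=0.8)
```

In this sketch a stronger deformation yields shorter, denser grains; a real instrument would replace the sine tone with generated audio samples and the single scalar with its own sensor data.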

Our hybrid generative adversarial network (GAN) architecture, GANSpaceSynth, generates new audio samples using features learned from the original dataset and can specify particular audio features to be present or absent in the generated audio samples.
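One common way to make a feature more or less present in generated output is to move a latent vector along a direction of high variance in the latent space. The sketch below assumes such a direction can be estimated by principal component analysis over sampled latent vectors; the text above does not detail the procedure, so this is an illustrative assumption rather than the GANSpaceSynth implementation. The helper names and the synthetic 4-dimensional latents are hypothetical, and no generator network is included.

```python
import math
import random


def top_principal_direction(samples, iterations=100):
    """Estimate the first principal component of `samples` by power iteration."""
    n, dim = len(samples), len(samples[0])
    mean = [sum(s[j] for s in samples) / n for j in range(dim)]
    centred = [[s[j] - mean[j] for j in range(dim)] for s in samples]
    v = [1.0 / math.sqrt(dim)] * dim  # initial unit-length guess
    for _ in range(iterations):
        # Apply the (unnormalised) covariance matrix without forming it: X^T (X v)
        proj = [sum(row[j] * v[j] for j in range(dim)) for row in centred]
        w = [sum(proj[i] * centred[i][j] for i in range(n)) for j in range(dim)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v


def shift_latent(z, direction, amount):
    """Move latent vector `z` along `direction`; the sign of `amount` picks more or less."""
    return [zi + amount * di for zi, di in zip(z, direction)]


rng = random.Random(0)
# Stand-in latent samples: 4 dimensions, with most variance along the first axis
latents = [
    [rng.gauss(0, 3.0), rng.gauss(0, 1.0), rng.gauss(0, 0.5), rng.gauss(0, 0.5)]
    for _ in range(500)
]
direction = top_principal_direction(latents)

z = [0.0, 0.0, 0.0, 0.0]
z_more = shift_latent(z, direction, 2.0)   # push the feature towards "present"
z_less = shift_latent(z, direction, -2.0)  # push the feature towards "absent"
```

In a full system, `z_more` and `z_less` would each be passed to a trained generator to produce audio; here they only show how a single scalar can steer a latent vector along a learned direction.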

Deep learning in audio

Current computational tool development is advancing existing sound synthesis models, enabling the modeling of new sounds and providing opportunities to generate audio more efficiently and quickly, while also producing interesting results from trained audio datasets. Deep learning in audio shifts the focus to the next level of real-time sound synthesis, enabling the creation of entirely new and unique sounds.

AI-terity is a new musical instrument, built and developed by Koray Tahiroğlu, Miranda Kastemaa and Oskar Koli in the SOPI research group, Aalto University School of ARTS.

2024 / NIME Music Performance

Communal

The composition Communal is one of a set of performances in which the performer and the musical instrument work together to direct, suggest and form a transitional musical narrative. For this performance, the piece takes the form of a collective that allows us to recognise the deeper transformations in the use of AI technologies towards co-determining how music can be present for and perceived by human musicians and audiences. The piece was composed using the tools we develop as part of the AI-terity and GANSpaceSynth projects. In the context of new interfaces for musical expression, AI technologies play an integral role today, offering new perspectives for experimenting with the ways in which a musical instrument manifests itself in human-technology relations, finding ways to embody itself into the otherness.

Communal performance live at TivoliVredenburg - Hertz concert hall during the NIME conference 2024.

Image © Rogier Boogaard

GAN latent space exploration with the AI-terity instrument, generating multiple audio samples, synthesising them simultaneously and synchronising grain playback in mill granular synthesis.

2023 / Sónar Festival

Music, Creativity and Technology

The AI-terity project was presented at the SONAR+D Festival, providing a research-driven contribution to artificial intelligence and musical interaction. This work introduces a novel instrument that integrates physical deformability and stiffness as core interaction modalities, enabling performers to manipulate sample-based granular synthesis through embodied gestures. By folding, pressing, and reshaping the instrument, musicians engage with a dynamically distributed latent sonic space, where sound samples are generated and organised in response to real-time physical input. The SONAR+D presentation emphasised AI-terity’s role as both a performance tool and a research platform, demonstrating how AI-driven synthesis techniques and tangible interface design can foster new idiomatic approaches to sound creation and performance practice.

SONAR+D Festival provides an international forum for critical engagement with emerging technologies in creative practice, increasingly with artificial intelligence as a central theme in music research, performance and artistic experimentation.

AI-terity exhibited at SONAR+D Festival, showcasing a deformable musical interface that integrates AI-driven granular synthesis with embodied performance gestures, offering new approaches to exploring and navigating latent sonic spaces.

2022 / Ars Electronica Festival

Sounding Lifeworld

Studio sessions of Berke Can Özcan and Koray Tahiroğlu turned into a continuous state of playing, revealing a variety of musical demands other than the ones they had been exposed to in their music practices. These sessions provided a state of transformation to a new sounding lifeworld with non-rigid but identifiable musical events followed by ever-shifting new sounds in a multidimensional latent space. The composition "Sounding Lifeworld", one of the outcomes of these studio sessions, was performed live at Ars Electronica 2022. It challenges AI-powered musical instruments’ potential in discovering a music performance that transcends musical expectations but still provides delicate relationships of symbiosis between human and non-human actors.

The Sounding Lifeworld explores what’s possible when an artificial intelligence model is applied to a musical instrument and communicates with the musicians within a flow that is tailored to the variance and diversity of the social actions of all the actors in a new lifeworld. At its core, the Ars Electronica festival live performance, the Sounding Lifeworld, is the result of an ongoing effort in the search for a musical language with AI and human musicians.

Sounding Lifeworld performed on Wednesday, September 7th, 2022 at KEPLER'S GARDENS, Festival University Stage

2022 / Berke Can Özcan and Koray Tahiroğlu

Studio Sessions

Since 2020, Berke Can Özcan and Koray Tahiroğlu have shared ideas and knowledge in what has become their first joint project, with a whole new range of musical possibilities. The result, in which Özcan and Tahiroğlu created several compositions using percussive drum loop modulations and an artificial intelligence (AI) model, was explored in studio sessions in Istanbul in March 2022.

Studio sessions turned into a continuous state of playing, opening up a new variety of musical demands other than the ones they had been exposed to in their music practices. These sessions provided a state of transformation to a new sounding lifeworld with non-rigid but identifiable musical events followed by ever-shifting new sounds with timbre changes in a multidimensional sonic latent space.

Berke Can Özcan and Koray Tahiroğlu

The Sounding Lifeworld challenges the AI-powered musical instrument’s potential to reshape its idiomatic features, discovering a music performance that transcends musical expectations but still provides delicate relationships of symbiosis between human and non-human actors.

The composition "Lost Wallet" was recorded live during the studio sessions in March 2022.

2020 / Ars Electronica Festival

Uncertainty

The composition Uncertainty keeps the musician in a hesitant state of performance, providing non-rigid but identifiable musical events, followed by ever-shifting new sounds. Uncertainty is a composition written for the AI-terity instrument, which comprises computational features of a particular artificial intelligence (AI) model to generate relevant audio samples for real-time audio synthesis. The unusual behaviour of the AI-terity puts the performer in an uncertain state during performance. Together with being able to move through timbre changes in sonic space, the emergence of new sounds allows the musician to explore a whole new range of musical possibilities. Composition turns into a continuous state of playing, reformulating an idiomatic relationship with the AI-terity and opening up a fresh variety of musical demands.

Ars Electronica is a festival platform for media arts, technology, and society, advancing critical discourse and experimentation at the intersection of artistic practice and emerging technologies.

The composition Uncertainty with AI-terity was performed at Ars Electronica 2020 and at the New Interfaces for Musical Expression (NIME) 2021 and AI Music Creativity (AIMC) 2021 conferences.