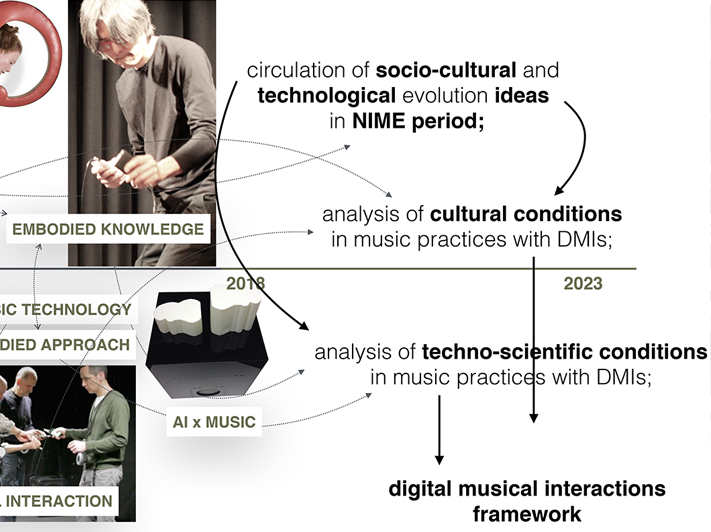

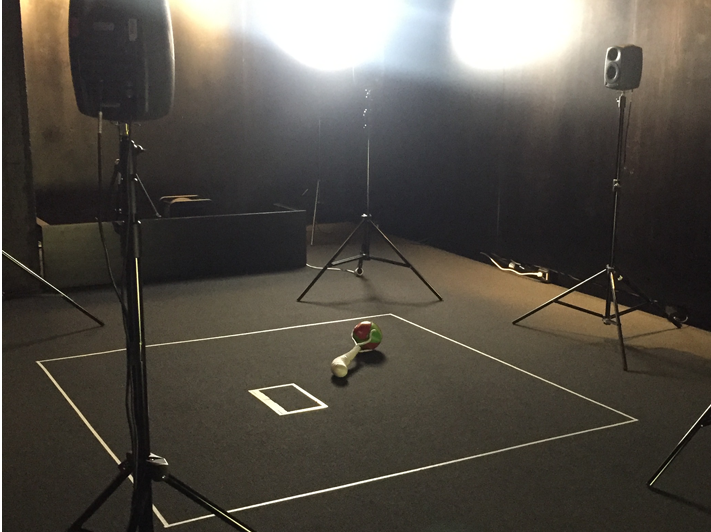

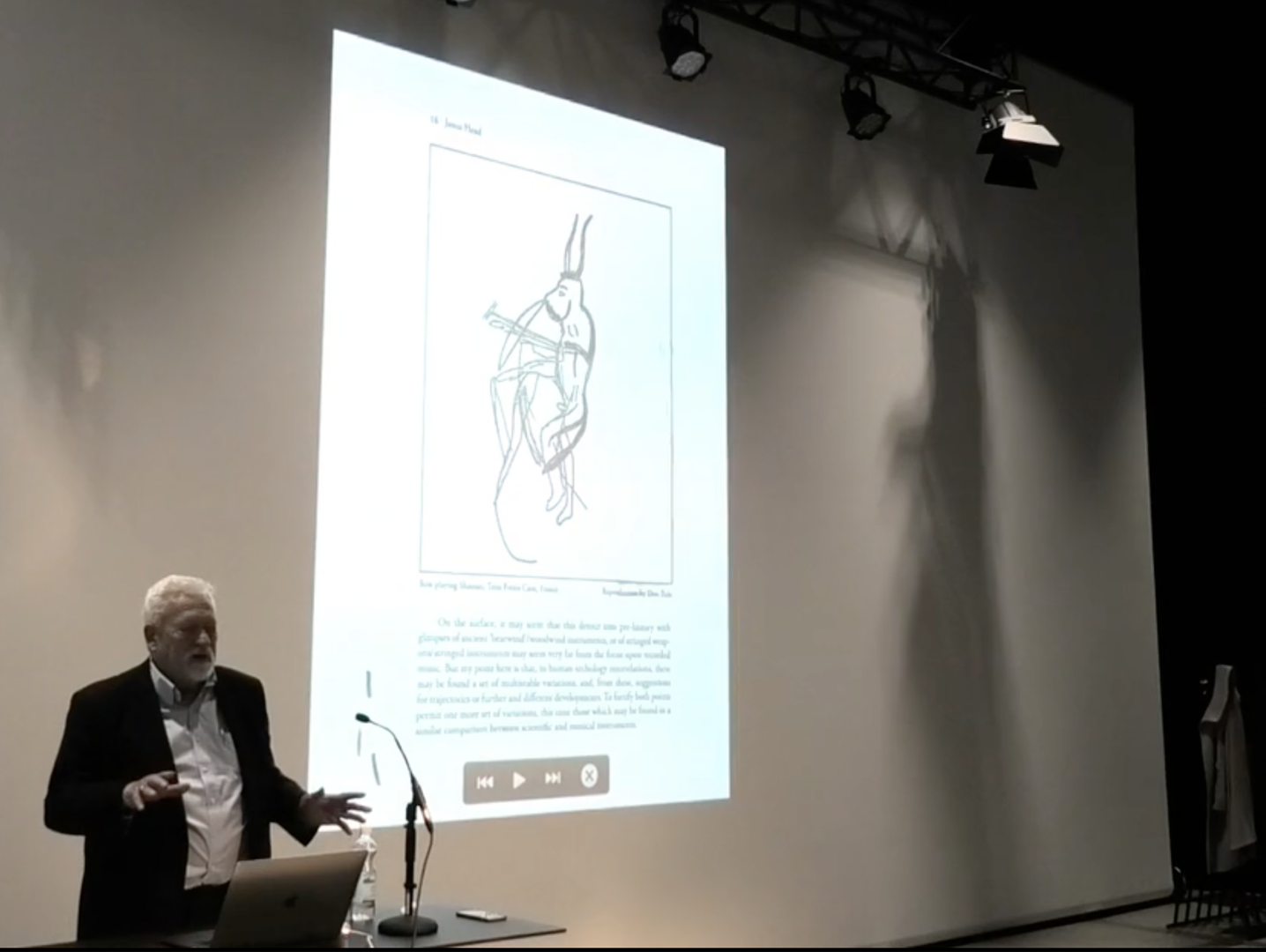

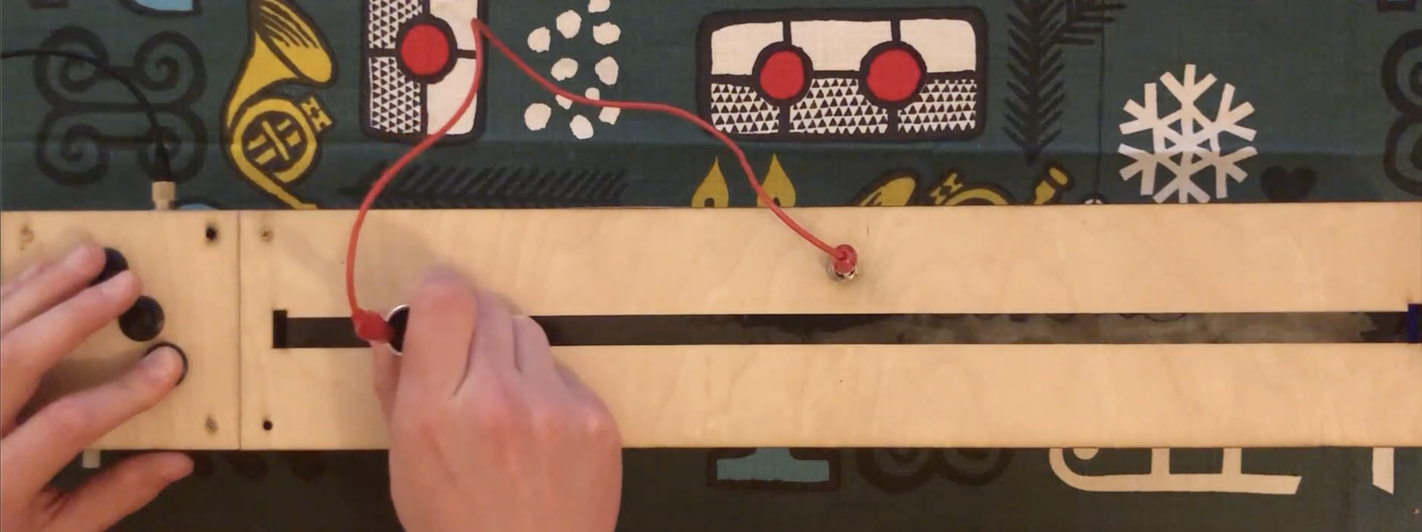

The Sound and Physical Interaction (SOPI) research group's main interests centre on the broad areas of Sound and Music Computing (SMC), New Interfaces for Musical Expression (NIME), and AI and Music Creativity (AIMC). SOPI focuses on the emerging role of audio and music technologies in digital musical interactions. Its work includes designing, implementing and performing with digital musical instruments, as well as interactive art and audio-visual production. The group is led by Koray Tahiroğlu and has received funding from Business Finland, the Academy of Finland, the Finnish Ministry of Education and Culture’s (MEC) Global Program Pilots for India & USA, Nokia Research Center, TEKES, the Aalto Tenure Committee and the A!OLE Aalto Online Learning network.

CrossModal VAE; Musician in the Loop

Coming soon...