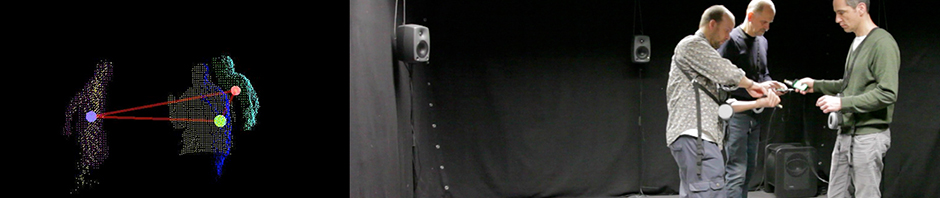

This research focuses on the expressiveness of multimodal interaction. Whereas interaction research typically aims to optimize interaction for efficiency and ergonomics, the aim here is to use interactive technologies to express emotions and feelings. The work continues the track initiated in the ‘Sonic Interaction’ work package in 2010/2011. One of that package's contributions was ‘Raja’, an interactive dance performance in which the dancers' movements were transformed into sound (sonified) in real time. The idea now is to widen the design space and include several output modalities in the expression. For example, a user's (or dancer's) body and hand movements, finger gestures and facial expressions could be tracked with inertial sensors and cameras. The tracked parameters could be used to create a virtual user interface presented with spatial auditory cues and haptic feedback, e.g. a music player controlled with hand and arm gestures. Alternatively, the parameters could be transformed into aesthetic sound and haptic cues presented to the audience. Hence, the work has two expected outcomes: a realistic user interface with expressive features, and an interactive art performance with a multimodal audience experience.
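To make the idea of transforming tracked parameters into sound and haptic cues more concrete, here is a minimal parameter-mapping sketch in Python. It assumes hypothetical inputs (hand speed in m/s and a normalized finger pressure) and arbitrary output ranges; the function names, constants and mappings are illustrative choices, not the mappings used in ‘Raja’ or planned for this project.

```python
import math
from dataclasses import dataclass


@dataclass
class MotionSample:
    """One tracked frame (hypothetical fields, for illustration only)."""
    speed: float     # hand speed in m/s, e.g. estimated from an inertial sensor
    pressure: float  # normalized finger pressure, 0.0 to 1.0


def clamp(x: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, x))


def linear_map(x, in_lo, in_hi, out_lo, out_hi):
    """Map x from [in_lo, in_hi] to [out_lo, out_hi], clamping at both ends."""
    t = clamp((x - in_lo) / (in_hi - in_lo), 0.0, 1.0)
    return out_lo + t * (out_hi - out_lo)


def speed_to_pitch_hz(speed, max_speed=3.0, low_hz=220.0, high_hz=880.0):
    """Exponential mapping: equal speed steps give equal musical intervals."""
    t = clamp(speed / max_speed, 0.0, 1.0)
    return low_hz * (high_hz / low_hz) ** t


def map_sample(sample: MotionSample) -> dict:
    """Translate one motion frame into audio and haptic control values."""
    return {
        "pitch_hz": speed_to_pitch_hz(sample.speed),
        # Harder presses sound louder; the 0.1 floor keeps the tone audible.
        "gain": linear_map(sample.pressure, 0.0, 1.0, 0.1, 1.0),
        # Vibration starts only above a small pressure threshold (0.2).
        "vibration": linear_map(sample.pressure, 0.2, 1.0, 0.0, 1.0),
    }


def render_sine(pitch_hz, gain, duration_s=0.05, sample_rate=44100):
    """Render a short sine-tone block for the given control values."""
    n = round(duration_s * sample_rate)
    return [gain * math.sin(2 * math.pi * pitch_hz * i / sample_rate) for i in range(n)]


controls = map_sample(MotionSample(speed=1.5, pressure=0.6))
block = render_sine(controls["pitch_hz"], controls["gain"])
```

With these constants, a hand moving at 1.5 m/s maps to 440 Hz, one octave above the 220 Hz floor. The exponential pitch mapping is chosen because pitch perception is roughly logarithmic in frequency; a real-time system would stream such blocks to an audio callback rather than build Python lists.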

Research questions:

– How to transform measured parameters such as motion, pressure and facial expressions into sound and haptic cues?

– What kind of realistic application could benefit from expressiveness?

– How to present proximity and location of virtual objects in space with auditory and tactile cues?
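For the question of presenting proximity and location, one simple baseline is sketched below in Python. It assumes a hypothetical listener-relative coordinate frame, derives an object's azimuth and distance, renders direction with constant-power stereo panning and inverse-distance attenuation, and lets vibration grow as the object enters an assumed 1 m haptic range. This is an illustrative assumption, not the project's method: stereo panning cannot convey elevation or front/back position, and a fuller system might use HRTF-based binaural rendering instead.

```python
import math
from typing import NamedTuple


class SpatialCue(NamedTuple):
    left_gain: float   # left audio channel gain
    right_gain: float  # right audio channel gain
    vibration: float   # haptic intensity, 0.0 to 1.0


def spatial_cue(x, y, ref_distance=0.5, haptic_range=1.0):
    """Cue for a virtual object at (x, y) metres relative to the user.

    Hypothetical frame: +x is to the user's right, +y is straight ahead.
    """
    distance = math.hypot(x, y)
    azimuth = math.atan2(x, y)  # 0 = ahead, +pi/2 = right, -pi/2 = left
    # Stereo cannot separate front from back, so sin() folds rear
    # positions onto the frontal arc: pan runs from -1 (left) to +1 (right).
    pan = math.sin(azimuth)
    # Constant-power panning: left^2 + right^2 is the same at every pan value.
    theta = (pan + 1.0) * math.pi / 4
    # Inverse-distance attenuation, capped at unity inside ref_distance.
    attenuation = ref_distance / max(distance, ref_distance)
    # Vibration rises linearly from 0 at haptic_range to 1 at zero distance.
    vibration = max(0.0, 1.0 - distance / haptic_range)
    return SpatialCue(
        left_gain=math.cos(theta) * attenuation,
        right_gain=math.sin(theta) * attenuation,
        vibration=vibration,
    )


ahead = spatial_cue(0.0, 0.5)       # close, straight ahead
hard_right = spatial_cue(2.0, 0.0)  # farther away, directly to the right
```

Here `ahead` yields equal channel gains of about 0.707 with vibration 0.5, while `hard_right` is essentially silent on the left and attenuated to 0.25 on the right, with no vibration because it lies outside the 1 m haptic range.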