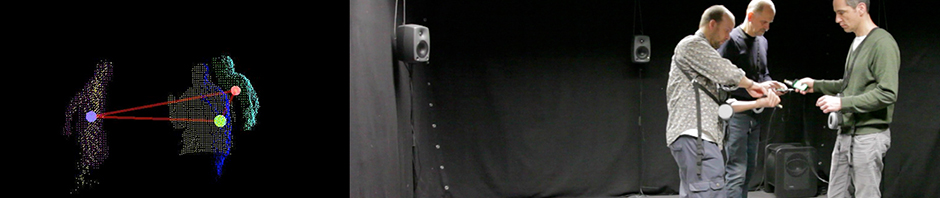

In a real-time music performance, CCREAIM listens to the musician's output from a musical instrument in the audio domain, generates sequences of predictions, and responds with musical counteractions. In the model's architecture, a VQ-VAE makes it possible to generate a diverse, good-quality distribution of audio samples, and a Transformer learns the correlations between audio samples and music in relation to the audio dataset it was trained on. The novelty of this project lies not only in the model's real-time performance but also in the visual cues that give the musician information about the AI model's decisions by showing which part of the musician's output the neural network is looking at while making a prediction. We use the Transformer's attention layers to visualise these cues both in real time and offline. The CCREAIM project aims to help musicians better understand the underlying decisions that AI tools make, and it does so by making those tools transparent and interpretable to musicians. Being able to interpret and understand the AI's decisions will let musicians better explain to themselves how the AI influenced the outcome and whether they considered that information and based a decision on it.
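
As an illustration of how attention layers can indicate which part of the input a model is looking at, the following is a minimal sketch and not the CCREAIM implementation. It assumes a single scaled dot-product attention head; the function names `attention_weights` and `top_attended` and the toy frame embeddings are hypothetical and chosen only for this example.

```python
import numpy as np

def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over a sequence of keys.

    query: shape (d,), the representation of the current prediction step.
    keys:  shape (n, d), one row per input frame (e.g. an embedded audio token).
    Returns n non-negative weights that sum to 1.
    """
    d = keys.shape[-1]
    scores = keys @ query / np.sqrt(d)   # one similarity score per input frame
    scores = scores - scores.max()       # subtract the max for a stable softmax
    exp = np.exp(scores)
    return exp / exp.sum()

def top_attended(weights, k=1):
    """Indices of the k input frames with the largest weights, highest first."""
    return np.argsort(weights)[::-1][:k]

# Toy input (assumption): four frames with orthogonal embeddings.
keys = np.eye(4)
# A query constructed to align with frame 2, so that frame dominates.
query = 3.0 * keys[2]

weights = attention_weights(query, keys)
most_attended = top_attended(weights, k=1)
```

Here `weights` is the kind of quantity that could be drawn as a visual cue over the musician's recent input, with `most_attended` marking the frame that contributed most to the prediction. A real Transformer has several heads and layers, so how their weights are combined for display is a separate design choice that this sketch does not address.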